Jeff Man

Jeff Man is an Information Security advocate, InfoSec Curmudgeon, PCI QSA, Trusted Advisor, and speaker with over 40 years of experience in cryptography, risk management, information security, and penetration testing. As a National Security Agency cryptanalyst, Jeff invented the "whiz" wheel, a cryptologic cipher wheel used by US Special Forces, as well as the first software-based cryptosystem produced by the NSA, and he pioneered the agency’s first “red team”. Jeff actively shares his unique and valuable knowledge as an international speaker, author, contributor to Tribe of Hackers, and podcast host for Paul’s Security Weekly. He gives back to the industry by sitting on advisory boards and by inspiring youth through diversity, equity and inclusion initiatives and mentorship. Jeff remains a driving force in the industry, bringing his deep expertise and no-nonsense approach to his role as a PCI QSA and Trusted Advisor at Online Business Systems.

On March 31, 2022, PCI DSS v4.0 was released. Today’s post is part of a series we are publishing that explores the changes to the PCI standard and provides insight into what those changes will mean for your organization. All of our posts can be found here.

One of the most significant changes introduced in PCI DSS v4.0 involves the documented approach for performing internal vulnerability scans. The internal vulnerability scanning requirement (now 11.3.1) contains more explicit details intended to provide clarification of the requirement:

- At least once every three months.
  - Formerly “four quarterly vulnerability scans”
- High-risk and critical vulnerabilities (per the entity’s vulnerability risk rankings defined in Requirement 6.3.1) are resolved.
  - Formerly “high-risk vulnerabilities”; formerly “addressed”
- Rescans are performed that confirm all high-risk and critical vulnerabilities (as noted above) have been resolved.
  - Formerly “high-risk vulnerabilities”
- Scan tool is kept up to date with latest vulnerability information. (new)
- Scans are performed by qualified personnel and organizational independence of the tester exists.
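To make the “at least once every three months” wording concrete, here is a minimal Python sketch that flags any gap between consecutive internal scans longer than three calendar months. It assumes you can export scan completion dates from your scan tool, and it treats “three months” as calendar months with the day clamped to the end of the month; that interpretation is an assumption for illustration, so confirm how your QSA reads it. The function names `add_months` and `interval_gaps` are hypothetical.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift d forward by whole calendar months, clamping the day to the end of
    the target month (e.g. Nov 30 + 3 months -> Feb 28 or 29)."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def interval_gaps(scan_dates: list[date], max_months: int = 3) -> list[tuple[date, date]]:
    """Return each pair of consecutive scans where the later scan falls more than
    max_months calendar months after the earlier one."""
    ordered = sorted(scan_dates)
    return [
        (earlier, later)
        for earlier, later in zip(ordered, ordered[1:])
        if later > add_months(earlier, max_months)
    ]
```

For example, `interval_gaps([date(2024, 1, 15), date(2024, 6, 20)])` flags the January/June pair, while a cadence of January 15, April 10, July 8, and October 1 returns an empty list because each scan lands inside the window opened by the previous one.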

However, the big changes are found in the following sub-requirements:

11.3.1.1 All other applicable vulnerabilities (those not ranked as high-risk or critical per the entity’s vulnerability risk rankings defined at Requirement 6.3.1) are managed as follows:

- Addressed based on the risk defined in the entity’s targeted risk analysis, which is performed according to all elements specified in Requirement 12.3.1.

- Rescans are conducted as needed.
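The split between 11.3.1 and 11.3.1.1 can be sketched as a simple triage step. This is a hypothetical illustration, not a prescribed process: the `Finding` record, the `triage` function, and especially the remediation windows in `TRA_WINDOW_DAYS` are made-up placeholders, since the real windows must come out of your own targeted risk analysis (Requirement 12.3.1).

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Finding:
    host: str
    title: str
    ranking: str  # the entity's own ranking, per Requirement 6.3.1


# Requirement 11.3.1: these rankings must be resolved and confirmed by rescan.
MUST_RESOLVE = {"critical", "high"}

# Requirement 11.3.1.1: everything else is addressed per the targeted risk analysis.
# HYPOTHETICAL windows for illustration only; real values come from your own TRA (12.3.1).
TRA_WINDOW_DAYS = {"medium": 90, "low": 180, "informational": 365}


def triage(findings: list[Finding], scan_date: date
           ) -> tuple[list[Finding], list[tuple[Finding, Optional[date]]]]:
    """Split findings into (must_resolve, managed). Each managed finding is paired
    with a due date from TRA_WINDOW_DAYS, or None if no window is defined."""
    must_resolve: list[Finding] = []
    managed: list[tuple[Finding, Optional[date]]] = []
    for f in findings:
        rank = f.ranking.lower()
        if rank in MUST_RESOLVE:
            must_resolve.append(f)
        else:
            window = TRA_WINDOW_DAYS.get(rank)
            due = scan_date + timedelta(days=window) if window is not None else None
            managed.append((f, due))  # None flags a ranking your TRA does not cover yet
    return must_resolve, managed
```

Returning `None` rather than silently dropping a finding is deliberate: under 11.3.1.1 a ranking with no defined handling is itself a gap worth surfacing.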

11.3.1.2 Internal vulnerability scans are performed via authenticated scanning as follows:

- Systems that are unable to accept credentials for authenticated scanning are documented.

- Sufficient privileges are used for those systems that accept credentials for scanning.

- If accounts used for authenticated scanning can be used for interactive login, they are managed in accordance with Requirement 8.2.2.
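One practical way to keep the “systems unable to accept credentials are documented” list honest is to compare it against each scan’s authentication results. The sketch below assumes you have already extracted three host lists (in-scope targets, hosts where authentication succeeded, and your documented exceptions); how a given scanner reports authentication status varies by product, so that extraction step is left out, and the function name is hypothetical.

```python
def credential_coverage_gaps(
    targets: set[str],
    auth_succeeded: set[str],
    documented_exceptions: set[str],
) -> tuple[list[str], list[str]]:
    """Compare one scan's authentication results with the documented list of
    systems that cannot accept credentials (11.3.1.2).

    Returns (undocumented, stale):
      undocumented: in-scope targets where authentication did not succeed and
                    that are not on the documented exception list
      stale:        documented exceptions that actually accepted credentials
    """
    failed = targets - auth_succeeded
    undocumented = failed - documented_exceptions
    stale = documented_exceptions & auth_succeeded
    return sorted(undocumented), sorted(stale)
```

An “undocumented” host is not necessarily incapable of accepting credentials; it may simply have had a bad password or missing privileges during that scan, which is exactly the kind of thing the “sufficient privileges” bullet is meant to catch.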

This requirement is a best practice until 31 March 2025, after which it will be required and must be fully considered during a PCI DSS assessment.

Let’s summarize the new aspects of the vulnerability scanning requirements, 11.3.1 through 11.3.1.2, and provide clarification based on my experiences as a QSA.

- At least once every three months – This attempts to provide clarity to those entities that believe quarterly scanning could be “once within a quarter,” meaning they could scan in January for Q1 and June for Q2 and still adhere to the requirement. Nope.

- High-risk and critical vulnerabilities are resolved – The implied meaning of the criteria was always “high-risk and higher” but sometimes it’s better to put it in writing to help clarify the meaning.

- Rescans are performed that confirm all high-risk and critical vulnerabilities (as noted above) have been resolved – The “and critical” wording was added for consistency with the initial scan criteria. Bottom line – fix it!

- Scan tool is kept up to date with latest vulnerability information – This appears to be a nod to today’s scan vendors, which market themselves as vulnerability management solutions and want you to rely on scanning to identify vulnerabilities (per PCI DSS 6.3.1). The more direct interpretation is that an updated scan engine will find the newest vulnerabilities.

- All other applicable vulnerabilities are managed – This means you can no longer ignore informational, low, or medium risk findings. You don’t necessarily need to resolve them, but you do need to explicitly acknowledge they are there, have a potential impact, and demonstrate that you have considered the overall risk of the vulnerability remaining in your environment.

- Internal vulnerability scans are performed via authenticated scanning – Wait, what? That’s right. Your scan that used to have a handful of low-risk findings is about to contain hundreds, maybe thousands of findings that you will have to address.

The requirement for authenticated scanning is the biggest change and deserves a closer look.

The ability of a vulnerability scanner to log in remotely to a system using credentials has existed in the major scan engines since about the time the first PCI DSS was published, back in 2004. The rationale for performing a scan by actually logging into the target system is pretty simple – you get better, more accurate results because you can actually see what’s running on the target system.

Remember, vulnerability scanners ‘detect’ vulnerabilities by querying a target on multiple TCP/IP ports and seeing what type of response is given. Vulnerabilities are discovered based on which services respond to queries and how they respond. Often the vulnerabilities are guesses based on assumptions – “if you’re running the XYZ service, there are the following issues with the XYZ service.” The service may or may not be running, it may be running a version that has already been patched or fixed, or it may in fact be vulnerable. These educated guesses sometimes result in what is called a “false positive”, and those responsible for fixing the problems then have to provide evidence that the finding is a false positive, which is often a very tedious exercise.
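A toy model helps show why version-based guessing produces false positives. In the sketch below, everything is hypothetical: the “XYZ” service, the advisory ID `VULN-0001`, and the fixed version 2.4.3. The unauthenticated check can only compare the version advertised in a banner, while the authenticated check can also see which fixes the vendor actually applied. Backporting, where a distribution patches a flaw without changing the upstream version number, is one common real-world cause of exactly this mismatch.

```python
import re

# HYPOTHETICAL advisory for illustration: XYZ versions below 2.4.3 are affected.
ADVISORY_ID = "VULN-0001"
FIXED_UPSTREAM = (2, 4, 3)


def parse_version(text: str) -> tuple[int, ...]:
    """Pull the first dotted major.minor.patch version out of a string."""
    m = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if m is None:
        raise ValueError(f"no version found in {text!r}")
    return tuple(int(part) for part in m.groups())


def unauthenticated_verdict(banner: str) -> bool:
    """All a remote probe can do: infer vulnerability from the advertised version."""
    return parse_version(banner) < FIXED_UPSTREAM


def authenticated_verdict(package_version: str, patched_advisories: set[str]) -> bool:
    """A credentialed check can consult the installed package and the list of
    fixes the vendor applied (e.g. a backported patch)."""
    if ADVISORY_ID in patched_advisories:
        return False
    return parse_version(package_version) < FIXED_UPSTREAM
```

With a banner of `"XYZ/2.4.1 (Linux)"`, the unauthenticated check reports the host as vulnerable; with installed package `"2.4.1-5"` and `{"VULN-0001"}` recorded as patched, the authenticated check does not. That gap is the false positive someone would otherwise have to argue away by hand.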

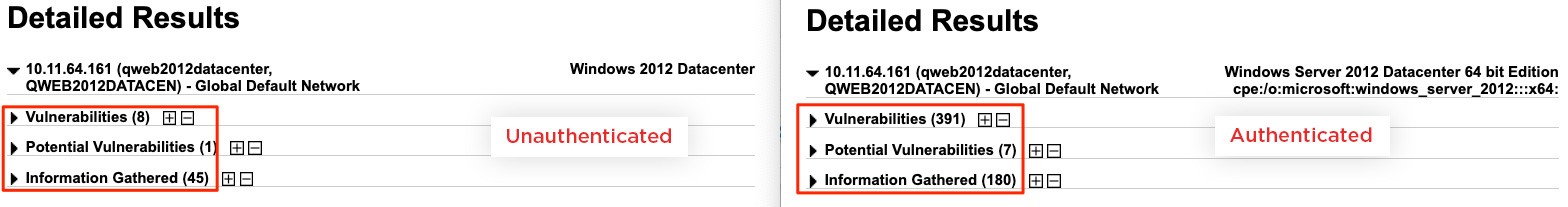

The promise of an authenticated scan is that false positives are greatly reduced because the guessing/guilt-by-association element of scan findings is eliminated. Authenticated scans also yield more findings because they are getting a truer snapshot of the targeted system. A Qualys blog on “Unified Vulnerability View of Unauthenticated and Agent Scans” provided some side-by-side results:

Figure 3: Unauthenticated vs. Authenticated Scan Results

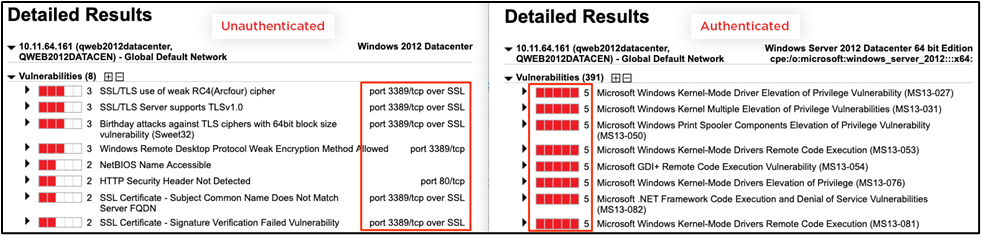

What is even more compelling in terms of using authenticated scans is how they reveal more severe vulnerabilities that expose the entity to compromise.

Figure 4: Detailed Findings of Both Scans

The ability to detect vulnerabilities more accurately, uncover more critical findings, and produce more comprehensive results seems like a no-brainer. Yet this capability is not universally accepted or practiced, particularly by entities that are subject to PCI DSS compliance. The paradox is that too many companies want to find fewer results, so they have less cleanup and remediation work to do, rather than find more accurate and impactful results.

This is why this new requirement is expected to be so disruptive and why the Council is giving entities nearly three years to put it fully in place.

Of course, you will want to try authenticated scanning early and often to determine how many more findings it discovers. Remember that ALL of the findings must now be either resolved or addressed according to the ‘new rules’ in PCI DSS v4.0.

If you’re not doing so already, pay close attention to the requirement (new: 6.3.1/old: 6.2.1) for developing a risk ranking (remember this has been required since PCI DSS v2.0). There is new guidance for this requirement that states:

“Note: Risk rankings should be based on industry best practices as well as consideration of potential impact. For example, criteria for ranking vulnerabilities may include consideration of the CVSS base score, and/or the classification by the vendor, and/or type of systems affected.

Methods for evaluating vulnerabilities and assigning risk ratings will vary based on an organization’s environment and risk-assessment strategy. Risk rankings should, at a minimum, identify all vulnerabilities considered to be a “high risk” to the environment. In addition to the risk ranking, vulnerabilities may be considered “critical” if they pose an imminent threat to the environment, impact critical systems, and/or would result in a potential compromise if not addressed. Examples of critical systems may include security systems, public-facing devices and systems, databases, and other systems that store, process, or transmit cardholder data.”
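As a starting point for a documented risk ranking, here is a minimal sketch of one possible policy built from the criteria the guidance mentions: CVSS base score, vendor classification, and type of system affected. The CVSS bands follow the CVSS v3.x qualitative severity scale (Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0), with a 0.0 score mapped to “informational” here. The escalation rule and the system-type labels are hypothetical choices for illustration, not something the Council prescribes; your methodology should reflect your own environment.

```python
from typing import Optional

# System categories drawn from the guidance's examples of critical systems;
# the label strings themselves are hypothetical.
CRITICAL_SYSTEM_TYPES = {"security", "public-facing", "database", "cde"}

RANK_ORDER = ["informational", "low", "medium", "high", "critical"]


def cvss_rank(base_score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity band."""
    if not 0.0 <= base_score <= 10.0:
        raise ValueError("CVSS base score must be between 0.0 and 10.0")
    if base_score == 0.0:
        return "informational"
    if base_score < 4.0:
        return "low"
    if base_score < 7.0:
        return "medium"
    if base_score < 9.0:
        return "high"
    return "critical"


def entity_rank(base_score: float, vendor_rank: Optional[str] = None,
                system_type: Optional[str] = None) -> str:
    """Hypothetical policy: take the higher of the CVSS-derived and vendor
    rankings (vendor_rank must be one of RANK_ORDER), then escalate high-risk
    findings on critical systems to critical."""
    rank = cvss_rank(base_score)
    if vendor_rank is not None and RANK_ORDER.index(vendor_rank) > RANK_ORDER.index(rank):
        rank = vendor_rank
    if rank == "high" and system_type in CRITICAL_SYSTEM_TYPES:
        rank = "critical"
    return rank
```

Under this policy a 7.5 on an ordinary server stays “high”, the same 7.5 on a database becomes “critical”, and a 5.0 that the vendor classifies as high is ranked “high”.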

While conducting the research for this article, I spoke to colleagues at the “big three” vendors: Qualys, Rapid7, and Tenable. Besides asking “which type of scan is better?”, I asked, “does each type of scan provide the same results?” They all acknowledged that some conditions can only be effectively tested from an external viewpoint. In other words, while an authenticated scan gives you a better picture of what is and isn’t a vulnerability on the targeted system, it doesn’t report all the valid findings of an unauthenticated scan. Everyone agreed that the best option is to perform both methods of scanning.
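If you run both types of scan, a simple set comparison shows what each method contributes. This sketch assumes both scans come from the same tool, so findings can be keyed by a `(host, check_id)` pair; the check IDs in the example are made up, and real identifiers differ from vendor to vendor.

```python
ScanFinding = tuple[str, str]  # (host, check_id); IDs are tool-specific


def compare_scans(unauthenticated: set[ScanFinding],
                  authenticated: set[ScanFinding]) -> dict[str, set[ScanFinding]]:
    """Union both result sets and isolate findings reported by only one method."""
    return {
        "all": unauthenticated | authenticated,
        "only_unauthenticated": unauthenticated - authenticated,
        "only_authenticated": authenticated - unauthenticated,
    }
```

Anything in `only_unauthenticated` still needs a human look: it may be one of the externally observable conditions the vendors described, or a false positive that the credentialed view has effectively ruled out.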

The biggest hurdle for entities that take a minimalist approach to scanning, security, and PCI in general will be addressing all the new vulnerabilities that are about to be discovered in their environments. Entities that already embrace the security goals of PCI DSS should have fewer issues and surprises; they might already be taking advantage of authenticated scans.

To summarize, entities need to perform authenticated (credentialed) scanning, which is considered more thorough and will yield more accurate findings (emphasis on “more”), and they will also need to address all the findings, not just the “high-risk” and “critical” ones.


Online is ready to assist you in developing your PCI program, helping unpack what the v4.0 changes will mean for your organization, and then designing a compliance roadmap to get you there.

For additional insight and guidance from Online’s QSA team, explore our digital PCI DSS v4.0 Resource Center where we have identified and dissected many of the significant changes and new requirements in the latest release of the PCI Standard.
