**UPDATED NOVEMBER 24**

It's hard to believe that one of our first virtual environments was designed and developed to be the set of a virtual video, almost 6 months ago! In the land of tech, a lot can happen in that amount of time.

Since June, the metaverse and related topics such as AR/VR, blockchain, web3, digital twins, and NFTs have seen incredible advancements, applications, and adoption rates across the globe.

As the Innovation Lab of a digital transformation and security consultancy, we are constantly investigating the ways that our clients can use emerging tech to unlock new business opportunities.

Occasionally, a project makes its way to our lab bench that is unlike any other we’ve seen.

We have nothing to compare it to, and there is no “how-to guide” for solving it. We were recently given a unique challenge to respond to...

The Task: Produce, direct, & edit a corporate video announcement that showcases novel technology and is accessible via 2D and 3D devices, within a few weeks.

-Challenge accepted.

A team of a dozen experts in our Innovation Lab is currently working to determine the impact of the Metaverse on our clients and our organization of 400+. The team is actively honing its skills and developing IP to help our clients create new experiences in the Metaverse.

We have various projects underway, ranging from virtual world development, VR data visualization, and employee engagement tools to DeFi using blockchain and smart contracts.

We've integrated intelligent bot capabilities to serve users of augmented reality apps, and are testing Large Language Models and generative AI more broadly to handle some of the tasks our clients would like to automate. Natural language will be the primary mode of interaction with most immersive environments and is the interface for most of what we do as knowledge workers.

"Why is the #metaverse inevitable? It’s in our DNA. The human organism evolved to understand our world through first-person experiences in #spatial environments. It’s how we interact and explore. It’s how we store memories and build mental models. It’s how we generate wisdom and develop intuition." - Dr. Louis Rosenberg

In the Innovation Lab we research and apply novel tech to create new business possibilities. The corporate announcement task was not simply to record a video but to also build the space for the shoot to take place in. This video was important because it would announce our placement in the top 3 in the annual Best Places to Work competition, where we have placed consecutively for the past 16 years. We wouldn’t know our official placement until the day before the release of the video, so we had to create multiple endings for the video.

-Naturally, our first thought was to shoot 'on location' in the Metaverse.

We believe in the promise of VR and its strong benefits for applications with specific use cases: requiring simulation, changing place or time, interacting with a realistic 3D space or object, making education more real, or just plain gamification and entertainment. We believe even more strongly in the opportunities for Augmented Reality and the possibilities created by the integration of the real, existing digital, and future virtual worlds. We chose to produce a video that integrates IRL (in Real Life) and IVL (in Virtual Life) in an interesting way.

Along the way we gained valuable insights into Metaverse development, integration, and events that you can only earn by getting your virtual hands dirty.

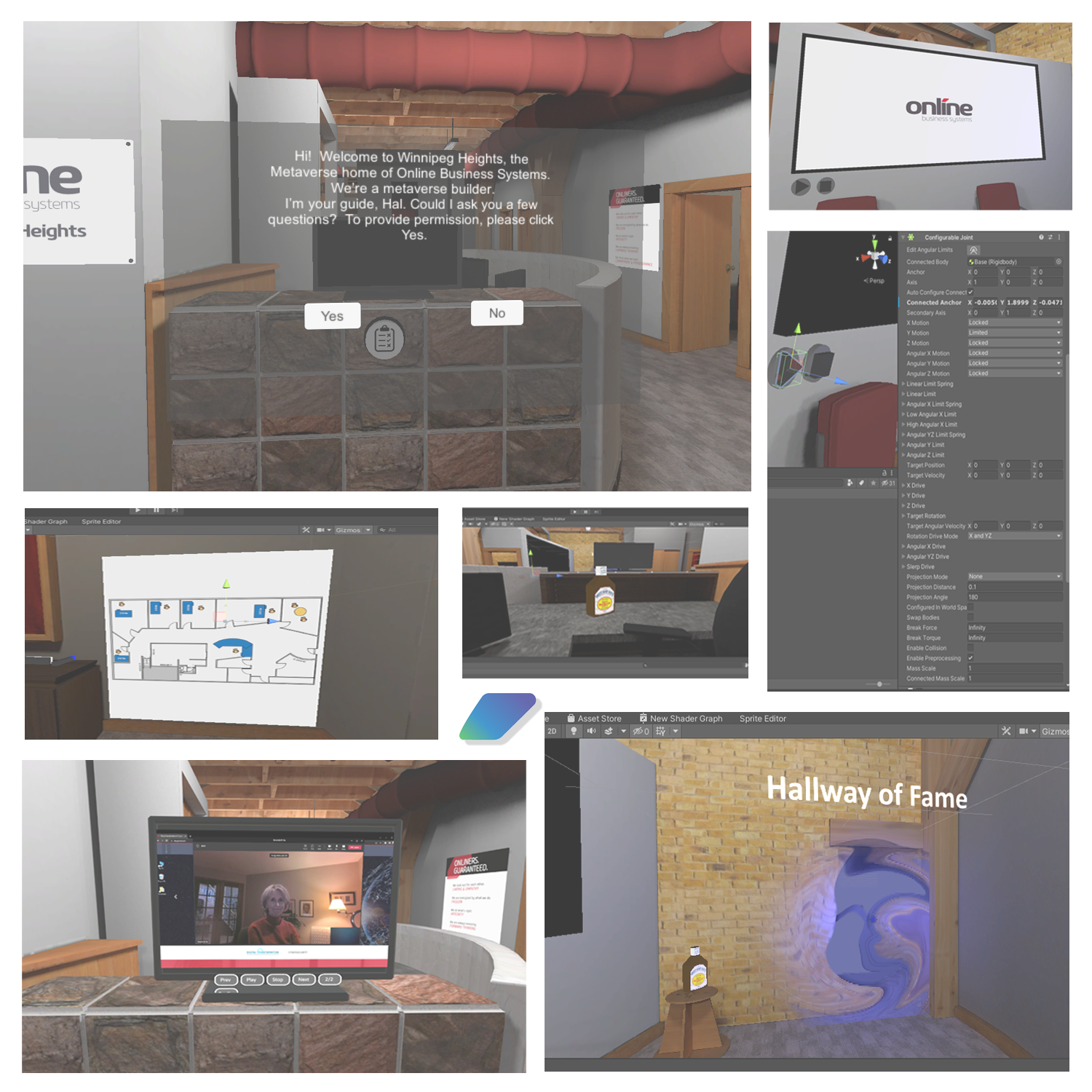

Through our Innovation Lab projects we are identifying and developing new skills that will be required in every role of our organization. For the announcement video, we put our new design and development skills to work to build a place we call Winnipeg Heights.

"WINNIPEG HEIGHTS"

We chose to build a very realistic rendition, a digital twin/replica if you will, of our Winnipeg headquarters that we named Winnipeg Heights to reflect its cloud deployment. Public visitors to an early prototype in Microsoft AltspaceVR remarked that it did not show imagination, as it resembled our real office and did not include the fantasy elements they expected.

-But a fantasy world was not what we wanted.

We wanted visitors to feel a sense of familiarity and home, augmented by new capabilities.

"Virtual reality is a medium, a means by which humans can share ideas and experiences." -Alan B. Craig

After all, this is the promise of new technologies – to amplify human ingenuity. When you see co-worker avatars walking down the hallways of what visually appears to be your real office, it's a very different experience and displays the power of VR. Online Clients who have visited the space immediately think of use cases within their own companies.

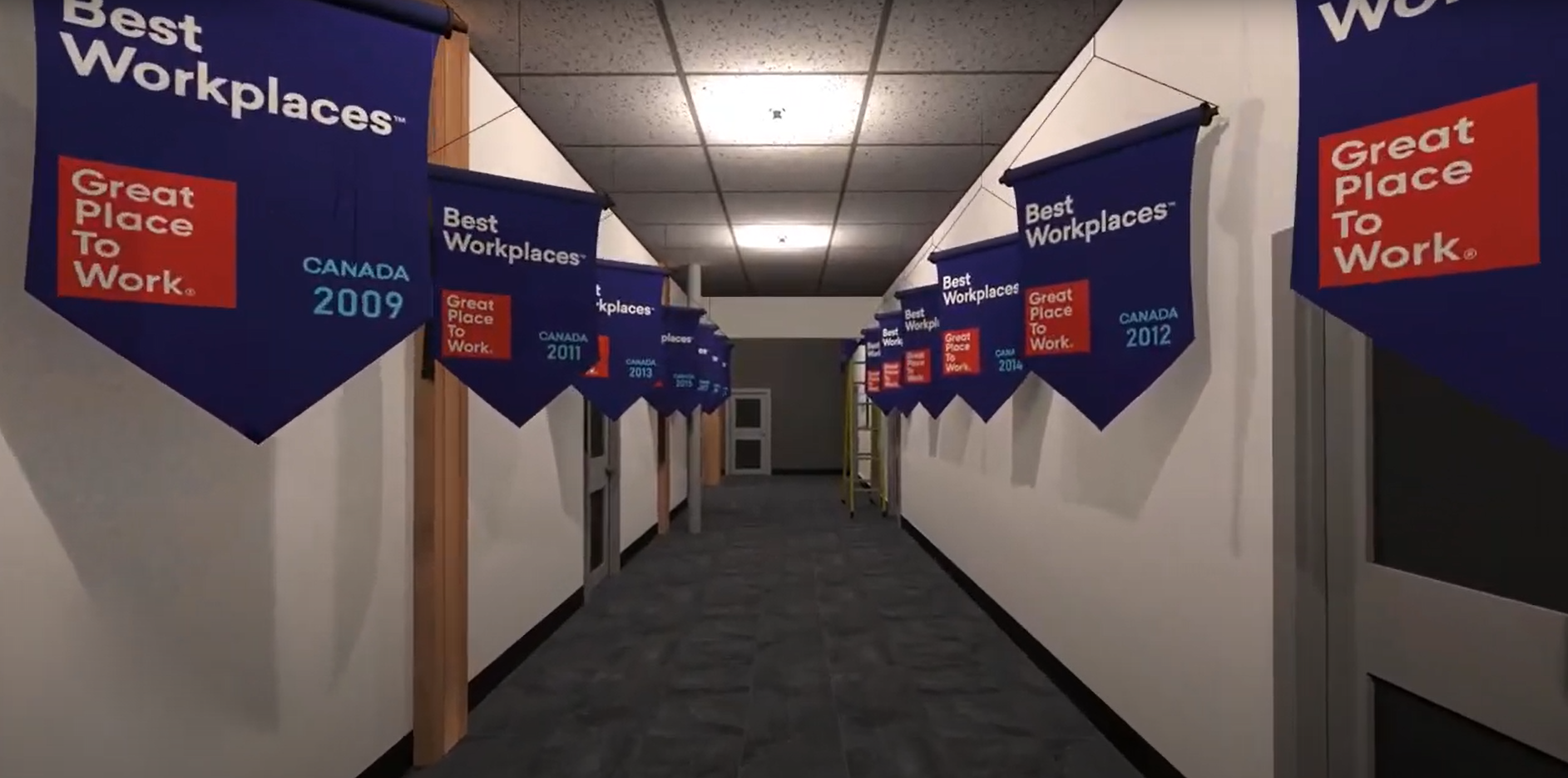

We added a new hallway displaying our Great Place to Work banners for the past 15 years, and a ladder to put up the 16th. But of course, you couldn’t see what was on that banner until the day of the award.

What Platforms can be Used When Designing These VR Spaces?

BLENDER & UNITY

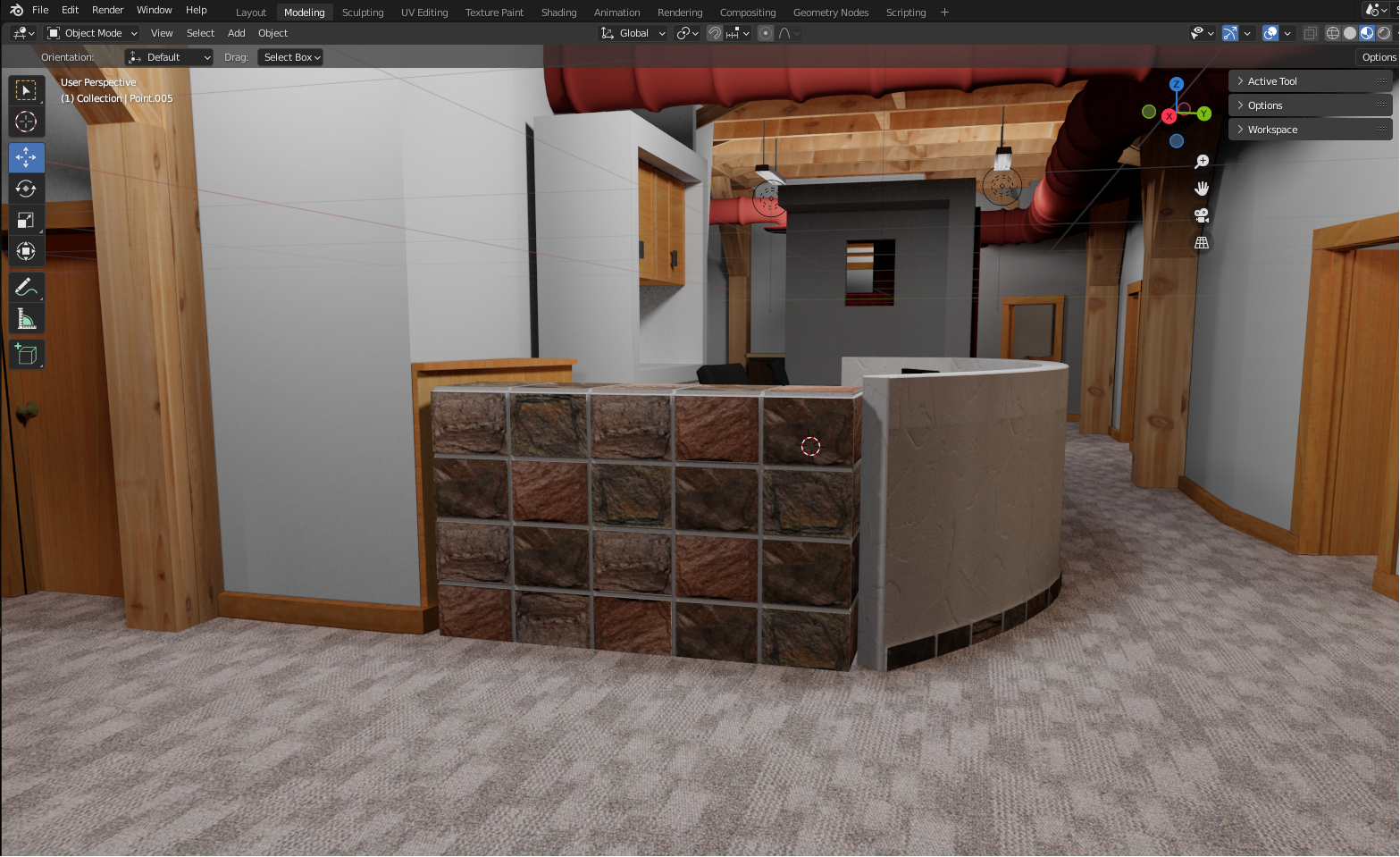

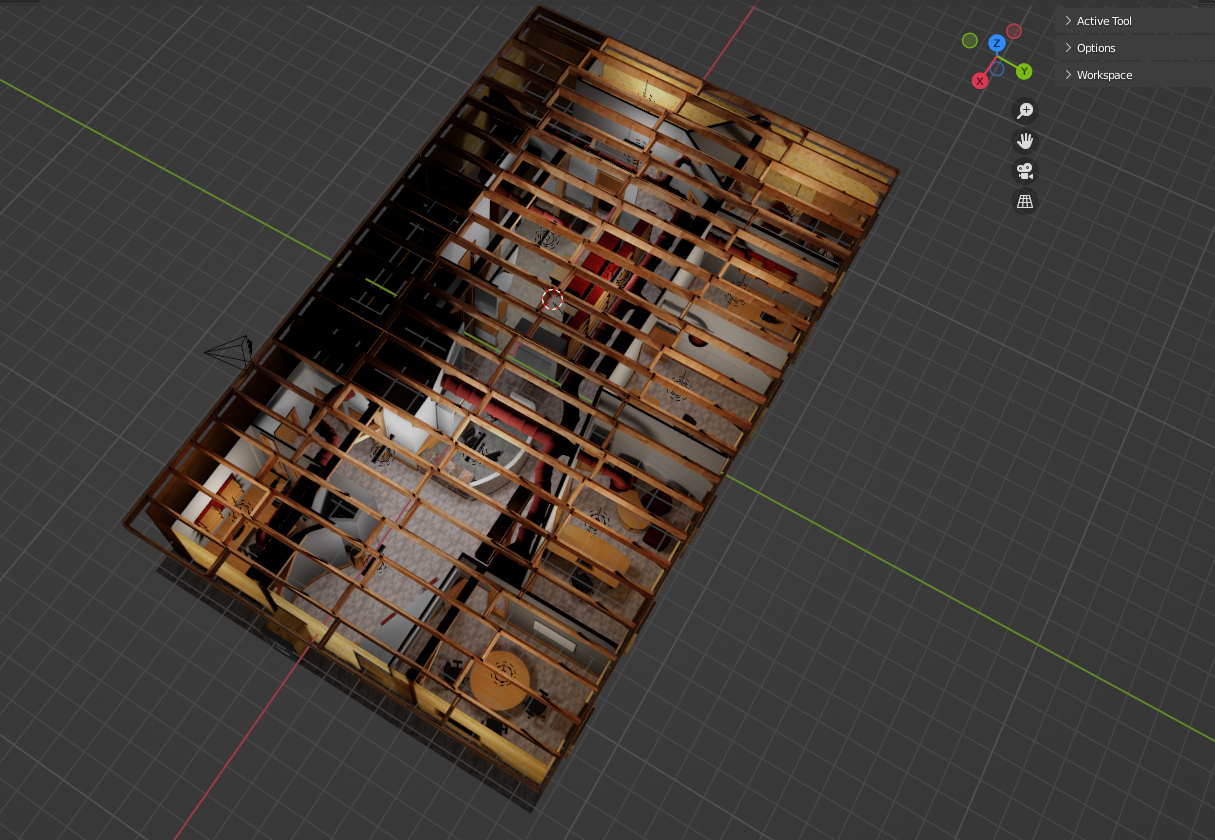

We created a 3D model of the space in Blender using construction drawings and photographs. For another Lab project we were able to obtain a 3D CAD model of the building we virtualized, which sped up the process. In this case all we had available were old-school blueprints and photos. We tried photogrammetry techniques that can convert photos to 3D models, but the space was too complex. For quality, there is no substitute for modeling the space by hand using tools such as Blender.

There is an abundance of 3D modelling software on the market, but the perpetually free and open source nature of Blender made it a good choice for this project. While slightly unintuitive at first, its hotkey-based workflow is very efficient once you are over the learning curve. While the team had a background in 3D development using other tools such as 3ds Max, Blender was unfamiliar, and creating this space was as much a crash course in the program as it was an effort to create a finished product.

The model started with floor plans for the building and a collection of images taken around the office. From there, a skeleton of the floor, walls, and doorways could be made using basic operations in Blender.

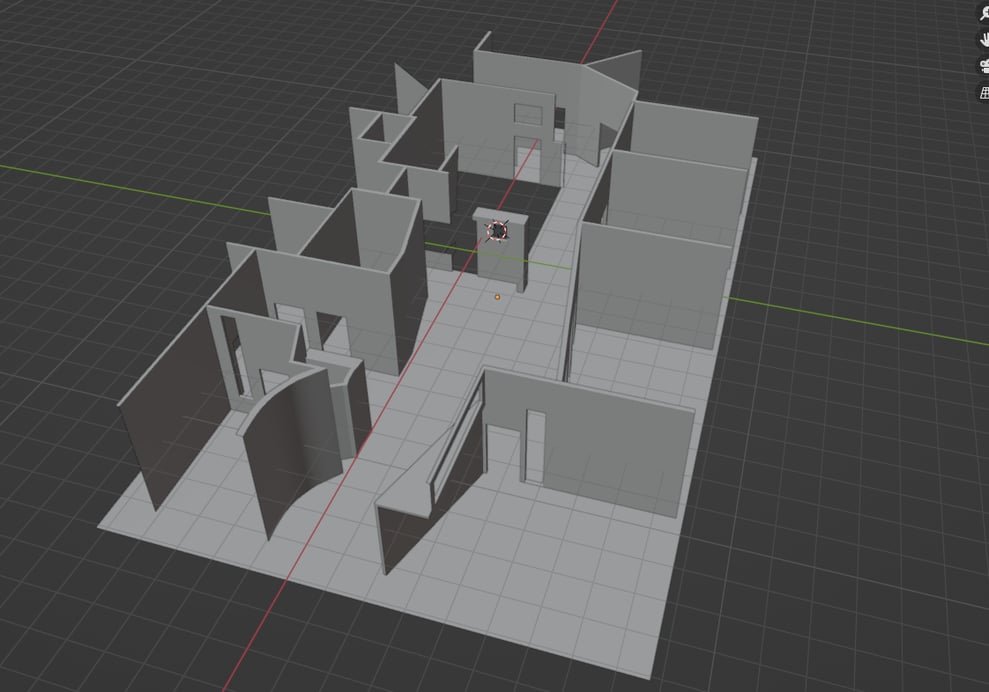

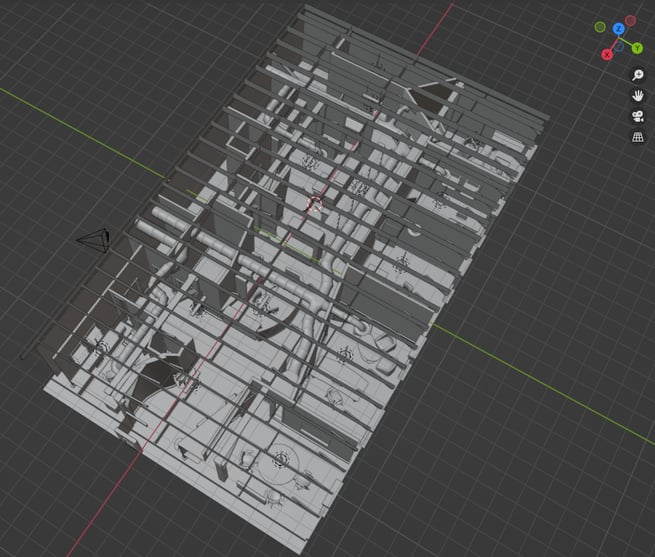

Below is an image of the Winnipeg Heights space after rough framing of walls and openings.

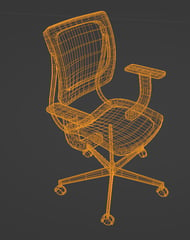

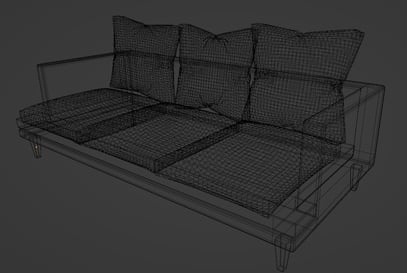

From here, custom assets were made to populate the space. To maximize learning, all assets were made from scratch in Blender. While this is not a scalable approach, invaluable techniques were learned in the process, and our reusable 3D object library was enriched.

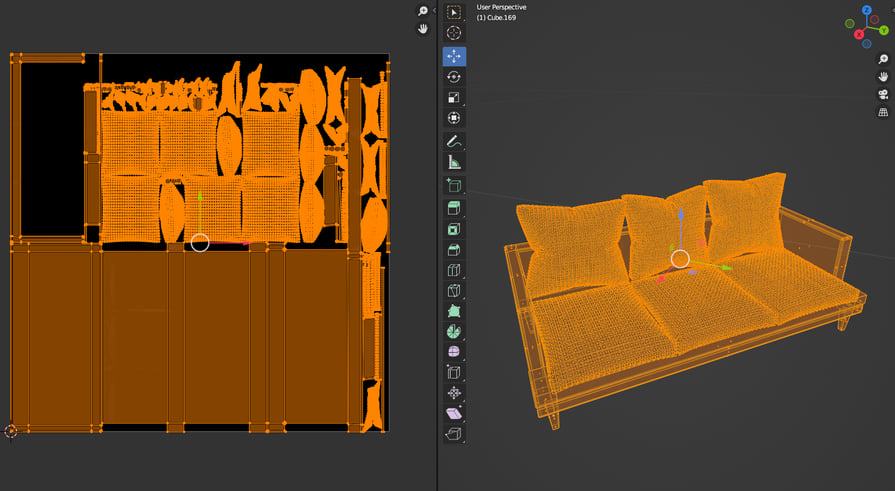

These chairs & couch are examples of the custom assets that were made for this project. They involved more sophisticated techniques such as hard surface modelling, sculpting, and cloth physics to name a few.

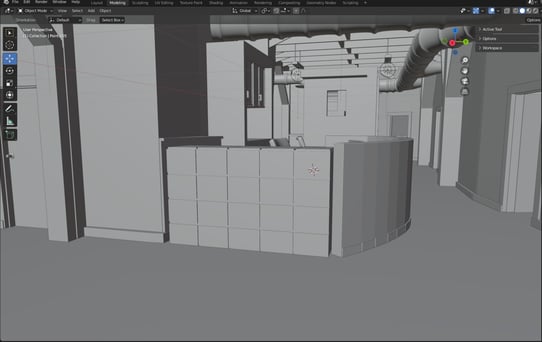

Here, the space is now populated with various assets and ceiling details.

The mesh model is a good start, but to add realism, all surfaces require textures and lighting. The reference images were used to find matching textures online. Textures were then added to each object in the scene through a process called UV unwrapping, in which a 3D object's surface is laid flat so that an image can be mapped onto it.
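As a rough illustration of what an unwrap provides, here is a minimal Python sketch (not Blender code) of how a UV coordinate assigned to a vertex selects a pixel in a texture image. The function name `uv_to_pixel`, the bottom-left UV origin, and the example face layout are assumptions made for illustration only.

```python
from typing import List, Tuple


def uv_to_pixel(u: float, v: float, width: int, height: int) -> Tuple[int, int]:
    """Map a UV coordinate in [0, 1] to a (column, row) pixel index.

    Assumes the UV origin is at the bottom-left of the image and that image
    rows are stored top to bottom, so the v axis is flipped.
    """
    if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
        raise ValueError("UV coordinates must lie in [0, 1]")
    # Clamp so that u == 1.0 or v == 0.0 still lands on the last pixel.
    col = min(int(u * width), width - 1)
    row = min(int((1.0 - v) * height), height - 1)
    return col, row


# Hypothetical example: one face of an unwrapped object occupies the
# lower-left quarter of a 2048x2048 texture.
face_uvs: List[Tuple[float, float]] = [(0.0, 0.0), (0.5, 0.0), (0.5, 0.5), (0.0, 0.5)]
face_pixels = [uv_to_pixel(u, v, 2048, 2048) for u, v in face_uvs]
```

Each corner of the flattened face ends up tied to a specific location in the image, which is what lets a renderer paint the right part of the texture onto the right part of the 3D surface.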

Here is an example of the UV Unwrap process:

Once the textures were added to the space, it started to look more familiar, but something was still missing...

THE IMPORTANCE OF LIGHTING

When it comes to the realism of a scene, lighting is arguably more important than textures. Realistic lighting gives your brain cues that what you are looking at is real. In Blender, we used the Cycles renderer to simulate global illumination, which includes bounce lighting that allows the color of surfaces to bleed into each other and creates a pleasing, realistic look.

Unfortunately, this lighting is very expensive to compute, so it cannot be used directly. To make this work, the lighting information had to be baked into the textures in a process aptly named “light baking”.

Using the Cycles renderer, we achieved the best results by disabling denoising (which caused artifacts at the edges of the mesh) and setting the noise threshold to 0.01, with the sample count allowed to vary between 0 and 4096. These are fairly intensive settings, and for 2048x2048 textures the bake time per texture was about 3 minutes on an Nvidia 3080 GPU.

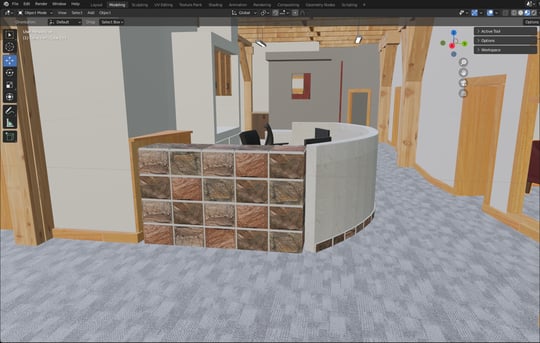

There are roughly 50 baked texture atlases used in the model. Here is the result of this process:

As you can see, the scene now has more depth, the textures fit together better, and the “cartoony” feel is gone.
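For a sense of scale, the figures quoted above can be combined into a rough estimate. The sketch below is illustrative arithmetic only: it assumes every one of the roughly 50 atlases is 2048x2048 and takes about 3 minutes to bake, and it assumes uncompressed 8-bit RGBA pixels, which is not something stated for this project.

```python
ATLAS_COUNT = 50          # "roughly 50 baked texture atlases"
MINUTES_PER_BAKE = 3      # "about 3 minutes" per 2048x2048 texture
TEXTURE_SIZE = 2048       # pixels per side
BYTES_PER_PIXEL = 4       # assumption: uncompressed 8-bit RGBA

# Total time to bake every atlas once, one after another.
total_bake_minutes = ATLAS_COUNT * MINUTES_PER_BAKE
total_bake_hours = total_bake_minutes / 60

# Raw (uncompressed) size of one atlas and of the whole set.
bytes_per_atlas = TEXTURE_SIZE * TEXTURE_SIZE * BYTES_PER_PIXEL
total_mib = ATLAS_COUNT * bytes_per_atlas / (1024 * 1024)

print(f"Full rebake: {total_bake_minutes} min ({total_bake_hours} h)")
print(f"Raw texture data: {total_mib} MiB")
```

Under these assumptions a full rebake is a matter of hours rather than minutes, and the raw texture data is large enough that per-platform size limits become a real consideration.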

From here, we had a model that could be ported to a variety of different VR platforms via the FBX and GLB formats, and into Unity for more advanced development.

UNITY

Unity, originally a game engine, is now an IDE we use to build a variety of 3D applications, and is our current tool of choice for creating interactable 3D environments. Unity offers build support for many platforms, including WebXR, integration with Microsoft AltspaceVR, and export options we have used for deployment to platforms such as Spatial.io and Mozilla Hubs. The process for preparing a model for deployment is fairly simple, and includes adding mesh colliders to all collidable meshes in the model. However, all platforms have model complexity constraints, such as number of objects, number of polygons, texture size, etc. For Altspace, we enabled flat lighting for the scene, so that lighting information only came from the baked textures. For Spatial.io and Mozilla Hubs, the baked textures exceeded the maximum file size, so regular textures and simple real-time lighting were used.

The process for building the WebXR space was more involved, but it allowed us the full range of capabilities available in Unity, a space we could host ourselves, and a space anybody can view using a standard Web 2.0 hyperlink. Web 2.0 refers to websites that emphasize user-generated content, ease of use, participatory culture and interoperability for end users.

To overcome the minimal support for WebXR in Unity, we used third-party frameworks to configure the VR capabilities in the browser. We tested third-party physics frameworks but they all performed poorly, which forced us to develop our own custom physics package using rigidbody physics and PID controllers.
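The custom physics package itself is not shown here. As a generic illustration of the PID idea, the following Python sketch (the real package would be written in C# for Unity) drives a body's position along one axis toward a target, such as a tracked hand, by applying a force each frame. The gains, mass, and frame rate are made-up values, not the ones we used.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook PID controller; gains are supplied by the caller."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        # Skip the derivative on the first call to avoid a startup spike.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(target: float, steps: int = 600, dt: float = 1 / 60) -> float:
    """Move a 1 kg body from 0 toward `target` using PID-computed force."""
    pid = PID(kp=40.0, ki=0.5, kd=12.0)  # hypothetical gains
    mass, position, velocity = 1.0, 0.0, 0.0
    for _ in range(steps):
        force = pid.update(target - position, dt)
        velocity += (force / mass) * dt  # semi-implicit Euler integration
        position += velocity * dt
    return position


final_position = simulate(target=1.0)
```

The appeal of this approach is that the held object stays a normal rigid body: it is pulled toward the hand by forces rather than teleported, so it can still collide with walls and other objects.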

The WebXR template and physics package are important assets in our toolkit, because they turn the rest of the work into ordinary Unity development that is not specific to VR. This is important, as it allows us to use a single Unity project and deploy it to VR or to a flatland browser, a critical design goal.

INTERACTABLES

A limitation of most proprietary VR platforms is the scarcity of interactables. An interactable is a functional object you can use, just like you do IRL. You would think that the vendor platforms would all provide extensive kits of useful things, but that’s not the case. The ability to build an interactable is a highly sought-after skill on platforms such as AltspaceVR. We have built a growing library of interactables that we can use on any project:

- Flat panel display screen for video and presentations

- Computer monitor with simulated display via images and video

- Working whiteboard with markers and eraser

- Teleprompter for video shoots

- Visitor survey

- AI conversational bot interface

- Teleportation portal for interworld travel

The pilot build of the Winnipeg Heights space took about one week, from concept to running in Altspace. Populating the space with usable objects took a couple more weeks. Our goal is to build virtual worlds in a platform-agnostic way. The same Winnipeg Heights model currently exists on four platforms: Microsoft AltspaceVR, Spatial.io, Mozilla Hubs, and a WebXR implementation in a desktop browser.

You can visit Winnipeg Heights in your browser by clicking this link.

Navigate this space by using your W, A, S and D keys.

https://obsverse.s3.ca-central-1.amazonaws.com/winnipeg-heights-2d/version_2.0/index.html

Or, if you use AltspaceVR in a headset or on your desktop, visit code: DFD158.

GRAVITY FRAMEWORK

It was immediately apparent to us that without the support of backend services, our experience was just a very fancy UI. As interesting as a Metaverse experience would be, we couldn’t fulfill business objectives without interconnections to our existing digital world, for fulfillment IRL.

"This is our forte as a company - the ability to craft sophisticated and challenging experiences from end-to-end, including all the heavy lifting on the backend."

- Larry Skelly, Principal Consultant, Technical Fellow

We named our framework “Gravity” as it tethers our Metaverse place to the real world and makes it relevant here in real life. Gravity is a microservice-architecture backend that manages virtual object state, captures events, and provides important services. For example, we use it to integrate backend AI services, enabling us to serve a visitor using an Azure Bot Service running in our corporate cloud. It is currently running in Azure, but it is designed abstractly so that the services can easily be ported to AWS or Google Cloud.

We take a very abstract view of assets and objects in a Metaverse place. IT Asset Management must evolve to manage a huge variety of virtual objects that are critical to conducting business in a virtual world. No longer just servers, or even cloud services… but virtual flat display panels, billboards, buses with advertising, spaces, and digital assistants. For data storage we chose CosmosDB, a NoSQL database, due to its characteristic of being schema-less. As our world evolves, we can handle new objects, attributes, and events without being constrained by a predefined schema.
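To illustrate why a schema-less store suits an evolving set of virtual objects, here is a minimal in-memory Python sketch. It does not use the Cosmos DB SDK and is not Gravity's actual code; the `ObjectStore` class, the field names, and the object kinds are all hypothetical.

```python
from typing import Any, Dict, List


class ObjectStore:
    """Tiny in-memory stand-in for a schema-less document container."""

    def __init__(self) -> None:
        self._docs: Dict[str, Dict[str, Any]] = {}

    def upsert(self, doc: Dict[str, Any]) -> None:
        # Only "id" is required; every other attribute is free-form.
        if "id" not in doc:
            raise ValueError("document needs an 'id'")
        self._docs[doc["id"]] = dict(doc)

    def query(self, **criteria: Any) -> List[Dict[str, Any]]:
        # Return documents whose attributes match all given criteria.
        return [
            d for d in self._docs.values()
            if all(d.get(k) == v for k, v in criteria.items())
        ]


store = ObjectStore()
store.upsert({"id": "panel-1", "kind": "display_panel",
              "world": "winnipeg-heights", "resolution": "1080p"})
store.upsert({"id": "bot-1", "kind": "digital_assistant",
              "world": "winnipeg-heights", "greeting": "Welcome!"})
# A brand-new object type with new attributes needs no schema migration.
store.upsert({"id": "bus-7", "kind": "bus",
              "world": "winnipeg-heights", "ad_campaign": "spring", "stops": [1, 2, 3]})

kinds_in_world = sorted(d["kind"] for d in store.query(world="winnipeg-heights"))
```

Each document carries only the attributes that make sense for its kind, so adding a new kind of virtual asset is a data change rather than a schema change.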

Winnipeg Heights is a hybrid cloud experience, deployed to multiple Metaverse platforms, currently with services in both Microsoft Azure and AWS.

ON LOCATION IN WINNIPEG HEIGHTS

Shooting a storyboard that integrated real life, simulated real life, and virtual life was a learning experience. One of our key learnings is that the creative challenges of the Metaverse are as big as, or bigger than, the technical ones. There are a number of different ways you can record a video, but to authentically represent the virtual space and the real people behind our avatars, we chose to have our entire cast and crew in the AltspaceVR virtual world.

Acting in a headset is challenging because you are confined to the virtual world. The team built a virtual teleprompter, fed by the script, that we could position anywhere we needed in the virtual world.

Our cameraman then used mixed reality to their advantage and recorded the scenes via avatar using a 2D desktop format.

As more use cases present themselves for filming in the metaverse, articles like this one will help creators determine the best methods for collecting audio and visual elements.

EDITING

After the scenes were recorded in both the Metaverse and the real world, it was simply a matter of piecing it all together while layering in additional elements to help tell the story. The objective of the video was to communicate and celebrate our recognition as one of Canada’s top employers, but it was important to keep it light and showcase the Online brand. The combination of callouts, sound effects, music, and timing brought the production to life and kept the message fun and engaging. Integrating a real-world scene allowed us to dynamically include our final placement in a video released a couple of hours after the announcement.

THE FINAL CUT

The final video integrates footage shot in the virtual world, in real life, and real life simulated in the virtual world. We hope you enjoy it.

Winnipeg Heights is a great corporate asset, our newest office location, and is a foundation for new initiatives providing a Total Experience for Clients, employees, and future Onliners.

How can Organizations Advance Their Current Service Offerings? UpDimension™ xDLC

We have a hypothesis, captured in a new service that we have trademarked, to encapsulate the impact and process of migrating our clients’ existing 2D applications to the 3D world.

That service is UpDimension™ xDLC.

-The vast majority of consumers favour experiences over transactions.

To remain competitive, our clients must UpDimension™ their existing 2D “flatland” digital applications to provide 3D experiences. In order to do this, we all need to UpDimension™ our game with new skills. Think about what a User Experience (UX) designer does today to design 2D screens. Now imagine the challenge of UX design translated to the new world: it's part architect, 3D modeler, interior designer, colour consultant, real estate stager, lighting rigger, animator, spatial audio specialist, wardrobe department, avatar psychologist, non-verbal communication expert, etc.

If you or your company are looking into what the metaverse might mean to your industry, we are here to help. Let’s start a conversation.

About Online’s Innovation Lab:

Online’s Innovation Lab connects our Clients, and the difficult business problems they are trying to solve, with emerging technology.

Our mission is to work with Clients to explore the possibilities of emerging technology and work with Clients to identify substantive changes for growth, uncover profitability, and improve customer satisfaction, by innovating on the edges.
