The final entry of a three-part blog on building secure solutions using Online’s Secure Solution Delivery Life Cycle (SSDLC).

In Part One of this blog, we looked at the reasons why we need to build security into applications from the start. In Part Two we learned the fundamental design principles we need to apply in order to build solutions that are secure by design. Now how do we apply these principles throughout our application delivery life cycle? The final part of this series introduces important life cycle activities and techniques from Online’s Secure Solution Delivery Life Cycle (SSDLC).

Secure Solution Delivery Life Cycle

Online’s SSDLC is a framework for building and delivering secure solutions, not just for developing secure software. Many solutions that are implemented have little to no custom code, yet they may pose as great a security risk as those that do, or a greater one. The SSDLC supports all life cycle approaches and is generic by design, so that it can be applied to almost any IT initiative.

It is life cycle-independent. It provides a set of Activities, Artifacts, Roles and Techniques that can be applied to projects being completed for clients or internally. It provides a body of knowledge for our project teams to complete full life cycle projects, and for our practitioners to reference when completing staff augmentation engagements.

It is technology-agnostic. It does not presume that any particular architectural style, type of application, or technology environment is being used. The core framework encourages organizations to focus on the capabilities required for business success and the protection of information, irrespective of the technologies being used. In addition, it provides Technology Modules that provide additional guidance for implementing secure solutions using technologies we commonly employ.

It is security policy-neutral. It does not reflect any specific organizational security policy. Part of the tailoring process when applying the SSDLC to an initiative is to incorporate security policy specifics.

Now let’s look at important activities across the stages of the life cycle.

Secure Design Starts at Inception

A good life cycle approach is iterative, cycling through basic activities at each stage (requirements, analysis, design, and potentially construction). It should also iterate through levels of security design. You cannot implement a secure solution if the design is fundamentally flawed. And design starts in Inception, at the first point where the solution starts to take shape as a “thing.”

During Inception, the vision for the business solution first comes together and we can take an initial look at the solution as a component, a new IT asset, and its associated Data Classification. We develop a concept for how users and other systems will interact with the new application and we can complete the first iteration of a Qualitative Risk Assessment based on knowledge of the user community and how we want to interact with them.

A good life cycle approach will add a Security Advisor to the project at the start of Inception – someone who is fluent in our security principles, knows how/when to apply them in the solution delivery life cycle, and is experienced in identifying security threats and implementing safeguards. The Security Advisor mentors the team in the application of the techniques that follow.

The Data Classification technique uses the organization’s Data Classification policy to assign a classification to the information assets that the solution will manage. This in turn identifies the organization’s baseline set of security controls required to protect data with that classification. For example, the policy may dictate the network zone of trust where the data must be stored when at rest, and that standard safeguards are required on each server (e.g. Host Intrusion Detection, File Integrity Management, Data Loss Prevention).
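As a rough illustration of how a classification can drive the baseline controls, here is a minimal Python sketch. The classification levels, zone names, and the rule that the most sensitive asset sets the baseline for the whole solution are all assumptions made for this example; a real policy defines its own.

```python
from enum import Enum


class Classification(Enum):
    # Hypothetical levels; substitute the levels from your own policy.
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3


# Hypothetical policy table: classification -> storage zone and server safeguards.
BASELINE_CONTROLS = {
    Classification.PUBLIC: {"zone": "dmz", "safeguards": set()},
    Classification.INTERNAL: {
        "zone": "internal",
        "safeguards": {"host_intrusion_detection"},
    },
    Classification.CONFIDENTIAL: {
        "zone": "restricted",
        "safeguards": {
            "host_intrusion_detection",
            "file_integrity_management",
            "data_loss_prevention",
        },
    },
}


def required_controls(asset_classifications):
    """Return the highest classification present and its baseline controls.

    Assumption: the most sensitive asset sets the baseline for the solution.
    """
    highest = max(asset_classifications, key=lambda c: c.value)
    return highest, BASELINE_CONTROLS[highest]


assets = {
    "marketing_copy": Classification.PUBLIC,
    "customer_records": Classification.CONFIDENTIAL,
}
level, controls = required_controls(assets.values())
print(level.name, controls["zone"], sorted(controls["safeguards"]))
```

Keeping the policy in a single table like this makes the tailoring step explicit: only the table changes from one organization to the next.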

Qualitative Risk Assessment is a scenario-based technique that identifies potential threats and the potential safeguards that protect against the threat, estimates the loss potential, and ranks threats and safeguards on a scale (numeric or low/medium/high). Design activities later in the life cycle work to mitigate the identified threats.
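The ranking step can be sketched in a few lines of Python. The scenarios below are invented, and scoring each one as likelihood multiplied by loss potential on a three-point scale is one common convention, assumed here for illustration rather than taken from the SSDLC.

```python
from dataclasses import dataclass

# Three-point qualitative scale mapped to numbers so scenarios can be ordered.
SCALE = {"low": 1, "medium": 2, "high": 3}


@dataclass
class Scenario:
    threat: str
    likelihood: str      # "low" | "medium" | "high"
    loss_potential: str  # "low" | "medium" | "high"
    safeguard: str

    @property
    def score(self) -> int:
        # Assumed scoring rule: likelihood x loss potential.
        return SCALE[self.likelihood] * SCALE[self.loss_potential]


def rank(scenarios):
    """Order scenarios from highest to lowest score."""
    return sorted(scenarios, key=lambda s: s.score, reverse=True)


# Illustrative scenarios only.
scenarios = [
    Scenario("Lost backup media", "low", "high", "Encrypt backups"),
    Scenario("Credential stuffing against the login page", "high", "medium",
             "Rate limiting and multi-factor authentication"),
    Scenario("Defacement of public help pages", "medium", "low",
             "Content integrity checks"),
]

ranked = rank(scenarios)
for s in ranked:
    print(f"{s.score}  {s.threat}  ->  {s.safeguard}")
```

The resulting order gives the later design activities a simple priority list of threats to mitigate.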

Secure Design in Requirements Definition

Design is driven by requirements and it is becoming increasingly important to understand the full breadth of requirements for security.

Functional Requirements for user interaction and system integration identify important potential attack vectors for the solution that need to be considered in subsequent Threat Modeling and Attack Surface Analysis and Reduction. Functionality that implements security features is also specified.

The Non-Functional Requirements capture the engineering considerations that affect security design: authentication, access control, audit and logging, accounting, reliability, availability and recovery from failure, disaster recovery, etc. Maintainability is also a traditional design goal; extending this to the security domain we need to address our “Design for Adaptability” design principle, providing the ability to continuously maintain the security of our solution after deployment (e.g. secure updates, patching without service disruptions, update of system secrets, ability to disable or isolate a compromised component in response to a threat, upgrading a cipher suite, etc.) without adversely affecting business users.

Secure Design in Architecture and Design

Architecture is about the next iteration of analysis and design activities, considering the domain requirements and architecturally significant features in order to develop a blueprint for the solution. Many of our basic security principles are applied during the architecture stage: Economy of Mechanism, Minimize Common Mechanisms, Complete Mediation, etc.

One of the key techniques employed in Architecture and Design is Attack Surface Analysis and Reduction. The “Attack Surface” of an application is the aggregate of the infrastructure, code, interfaces, protocols and services that are available to entities that interact with the system: users and other applications and processes. The exposed surface provides attackers with opportunities to exploit vulnerabilities in any component that makes up that surface. The Attack Surface extends beyond the solution itself to the development, maintenance, and operational environments that are required to support the solution and that could provide key insights into methods for attacking it. It also includes the cyber supply chain for the solution, which recent history has shown is often an Achilles heel.

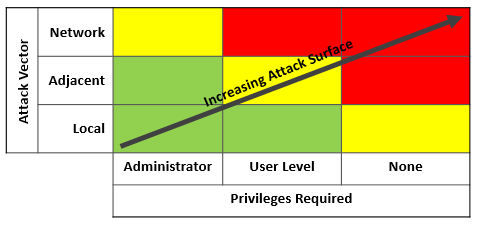

Of particular interest is the Attack Surface that is exposed to two classes of entities: entities that can interact with the system remotely, and entities that can interact without authentication. Note that the Common Vulnerability Scoring System (CVSS), which is maintained by FIRST and used by the NIST National Vulnerability Database, explicitly considers these two characteristics. Vulnerabilities that can be exploited remotely (the “Attack Vector” metric, renamed in CVSS v3) and/or without authentication (the “Privileges Required” metric, new in CVSS v3) score higher in the CVSS vulnerability risk rating.
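To make the effect of these two metrics concrete, the sketch below computes the CVSS v3.1 Exploitability sub-score, which is 8.22 multiplied by the numeric weights for Attack Vector, Attack Complexity, Privileges Required, and User Interaction. The weights are the published v3.1 values for the unchanged-scope case. This is only the exploitability portion, not a full base score calculator, and the function name is our own.

```python
# CVSS v3.1 metric weights (Privileges Required values are for unchanged scope).
ATTACK_VECTOR = {"network": 0.85, "adjacent": 0.62, "local": 0.55, "physical": 0.20}
ATTACK_COMPLEXITY = {"low": 0.77, "high": 0.44}
PRIVILEGES_REQUIRED = {"none": 0.85, "low": 0.62, "high": 0.27}
USER_INTERACTION = {"none": 0.85, "required": 0.62}


def exploitability(av, pr, ac="low", ui="none"):
    """Exploitability sub-score: 8.22 x AV x AC x PR x UI."""
    return (
        8.22
        * ATTACK_VECTOR[av]
        * ATTACK_COMPLEXITY[ac]
        * PRIVILEGES_REQUIRED[pr]
        * USER_INTERACTION[ui]
    )


# Remotely reachable with no privileges versus local access with high privileges.
remote_unauthenticated = exploitability("network", "none")
local_privileged = exploitability("local", "high")

print(f"remote, no privileges: {remote_unauthenticated:.1f}")  # 3.9
print(f"local, high privileges: {local_privileged:.1f}")       # 0.8
```

With the other metrics held equal, the remotely reachable, unauthenticated case scores nearly five times higher, which is why that part of the Attack Surface deserves the most attention.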

Attack Surface Analysis (ASA) is the process of identifying all of the distinct interfaces within the solution in terms of protocols and services, and the code that executes behind them. Attack Surface Reduction (ASR) aims to reduce the amount of code exposed through these interfaces. A core tenet of ASR is that there is a non-zero probability that the code will have a vulnerability, either now or in the future. Minimizing this code and eliminating anything superfluous will therefore reduce risk.

To reduce the Attack Surface, apply the principle of Least Functionality:

- Reduce the amount of code that executes by default. This can be done by minimizing the installation options and disabling unnecessary features of the component.

- Restrict the scope of who can access the code (administrators only, any authenticated user, or anonymous) and from where they can access it (remotely, remote from a specific subnet, local to the site, or local to the server).

- Restrict the set of identities that can access the code, by determining whether a feature is required by the majority of users, or whether a feature can be decomposed and each sub-feature more granularly secured to a smaller set of users.

- Reduce the privilege level under which the code runs.

- Consider operating system specific settings that further constrain capabilities.
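One way to reason about these steps is to keep an inventory of interfaces and compare a rough exposure figure before and after reduction. The Python sketch below is a toy model: the interface names and the numeric weights for who can reach an interface, and from where, are hypothetical and chosen only to show the idea.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Interface:
    name: str
    enabled: bool
    required: bool  # does the business solution actually need it?
    access: str     # "anonymous" | "authenticated" | "admin"
    reach: str      # "remote" | "subnet" | "site" | "local"


# Hypothetical weights: broader access and wider reach count for more.
ACCESS_WEIGHT = {"anonymous": 3, "authenticated": 2, "admin": 1}
REACH_WEIGHT = {"remote": 4, "subnet": 3, "site": 2, "local": 1}


def exposure(interfaces):
    """Sum a simple exposure weight over every enabled interface."""
    return sum(
        ACCESS_WEIGHT[i.access] * REACH_WEIGHT[i.reach]
        for i in interfaces
        if i.enabled
    )


def reduce_surface(interfaces):
    """Apply Least Functionality: switch off anything that is not required."""
    return [replace(i, enabled=i.enabled and i.required) for i in interfaces]


before = [
    Interface("public search API", True, True, "anonymous", "remote"),
    Interface("vendor sample application", True, False, "anonymous", "remote"),
    Interface("admin console", True, True, "admin", "subnet"),
    Interface("debug endpoint", True, False, "authenticated", "remote"),
]
after = reduce_surface(before)

print(exposure(before), "->", exposure(after))  # 35 -> 15
```

The same inventory can be extended to record the privilege level each interface runs under, so that the remaining bullets can be tracked in the same way.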

There is more to the technique than this. The Attack Surface has multiple dimensions, including its area, the duration for which it is exposed, and the size of the aperture through which it can be manipulated, but these nuances are beyond the scope of this blog. If you would like more information on this important technique please leave a comment below.

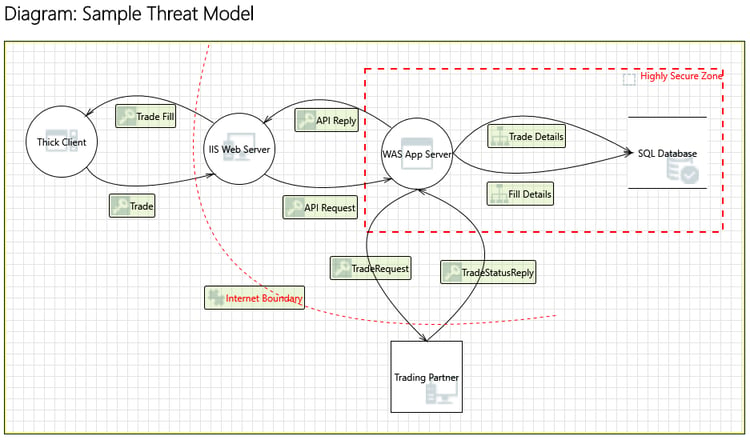

Once the solution design is nearing completion, a set of Threat Models should be developed to analyze the design, identify threats, and select mitigations. A Threat Model is a Data Flow Diagram that illustrates a usage scenario by decomposing the system into its components and external dependencies and showing the flow of data between them. A trust boundary marks the point where data moves between zones of differing privilege, for example from a low-privilege to a high-privilege zone of the application. Tools such as the Microsoft Threat Modeling Tool apply standard taxonomies (e.g. Microsoft STRIDE) to analyze the models and identify particular types of threats so that you can implement mitigations.
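The idea behind this kind of analysis can be sketched in Python. The example below models a tiny Data Flow Diagram and lists candidate STRIDE threats for every flow that crosses a trust boundary, together with the element receiving the data. The element names and zones are invented, and the table of which STRIDE categories apply to which element type follows a commonly cited "STRIDE-per-element" starting point; treat it as an assumption, not as the behavior of any particular tool.

```python
from dataclasses import dataclass

STRIDE = {
    "S": "Spoofing",
    "T": "Tampering",
    "R": "Repudiation",
    "I": "Information disclosure",
    "D": "Denial of service",
    "E": "Elevation of privilege",
}

# Assumed STRIDE-per-element mapping (a starting point, to be tuned per project).
APPLICABLE = {
    "external_entity": "SR",
    "process": "STRIDE",
    "data_store": "TRID",
    "data_flow": "TID",
}


@dataclass(frozen=True)
class Element:
    name: str
    kind: str  # key into APPLICABLE
    zone: str  # trust zone the element lives in


@dataclass(frozen=True)
class Flow:
    source: Element
    target: Element
    data: str

    def crosses_trust_boundary(self) -> bool:
        return self.source.zone != self.target.zone


def candidate_threats(flows):
    """List (subject, threat) pairs for flows that cross a trust boundary."""
    findings = []
    for flow in flows:
        if not flow.crosses_trust_boundary():
            continue
        label = f"{flow.source.name} -> {flow.target.name}"
        findings += [(label, STRIDE[c]) for c in APPLICABLE["data_flow"]]
        findings += [(flow.target.name, STRIDE[c]) for c in APPLICABLE[flow.target.kind]]
    return findings


# Hypothetical usage scenario: a browser submits an order that is then stored.
browser = Element("browser", "external_entity", "internet")
web_app = Element("web app", "process", "internal")
orders_db = Element("orders db", "data_store", "internal")

flows = [
    Flow(browser, web_app, "order form"),
    Flow(web_app, orders_db, "order record"),
]

findings = candidate_threats(flows)
for subject, threat in findings:
    print(f"{subject}: {threat}")
```

Only the browser-to-web-app flow crosses a boundary here, so the output concentrates review effort on that flow and on the process that receives untrusted data.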

Secure Design in Implementation

During the detailed design and the coding or configuring of the application software and infrastructure, continue to apply the Secure Design principles we reviewed. Use the Attack Surface Reduction technique during the detailed design and coding of any software components, and consider the Threat Modeling mitigations during Unit Testing.

If configuring software instead of building, minimize the attack surface through judicious configuration.

Secure Design in Quality Assurance

Although Secure Design, by definition, is a set of design activities that occur in the earlier stages of the life cycle, the design activities identify responsibilities to be fulfilled later in the life cycle. Ensure that the Threat Models developed during solution design drive scenarios to be exercised by System Testing and Penetration Testing. Test your reduced attack surface. Verify that the design has fulfilled all Non-Functional Requirements.

Secure Design in Deployment

Revisit the solution’s attack surface and further reduce it through configuration options. “Harden” the solution by disabling unnecessary component features, unused protocols and services, and unnecessary permissions.
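A hardening review can be as simple as comparing what is actually enabled on a deployed component with what the solution requires. The sketch below assumes that both lists are already available as plain data; the category and item names are hypothetical.

```python
def hardening_gaps(deployed, required):
    """Return, per category, the items that are enabled but not required."""
    gaps = {}
    for category, items in deployed.items():
        extra = sorted(set(items) - set(required.get(category, ())))
        if extra:
            gaps[category] = extra
    return gaps


# Hypothetical inventory of one server as deployed.
deployed = {
    "services": ["https", "ssh", "telnet", "ftp"],
    "features": ["tls", "directory_listing"],
    "permissions": ["app_read", "app_write"],
}

# Hypothetical list of what the solution design actually needs.
required = {
    "services": ["https", "ssh"],
    "features": ["tls"],
    "permissions": ["app_read", "app_write"],
}

gaps = hardening_gaps(deployed, required)
print(gaps)  # {'services': ['ftp', 'telnet'], 'features': ['directory_listing']}
```

Anything the check reports is a candidate to disable before go-live, or to justify and add to the required list.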

Summary

In this three-part blog we have reviewed the importance of baking security into your solution from the start, a set of design principles that you can apply, and a sampling of activities from Online’s Secure Solution Delivery Life Cycle that apply them at each stage of the process. We’d love to continue the conversation in more detail and hear about your experiences, so feel free to leave a comment below.

About Larry Skelly

Larry is a TOGAF and ITIL-certified Enterprise Architect in our Risk, Security and Privacy practice, with more than ten years of experience writing and teaching design methodologies and thirty years of experience with architecture and hands-on development. Larry understands the need to infuse security into everything that we do. He was also a primary contributor to Online’s Security Integration Framework (SIF) and Secure Solution Delivery Life Cycle (SSDLC), an approach for implementing secure IT solutions.
