Kevin Guenther

Organizations of all kinds are increasingly adopting user-centered Design Thinking practices instead of sitting around boardroom tables with their peers, dreaming up the next big feature or digital product they think their users need. But depending on your company culture and organizational structure, you may still be finding it challenging to break the “grand assumption” habit.

Whether you’re designing something new or adding some much-needed features to an existing product, every digital experience initiative should meet the trifecta goals of Design Thinking:

- Feasibility

- Viability

- Desirability

But discovering and measuring whether you have met these goals requires a lot of qualitative research, a lean design/build process, and actionable quantitative data, and doing that right means avoiding “grand assumptions” at all costs.

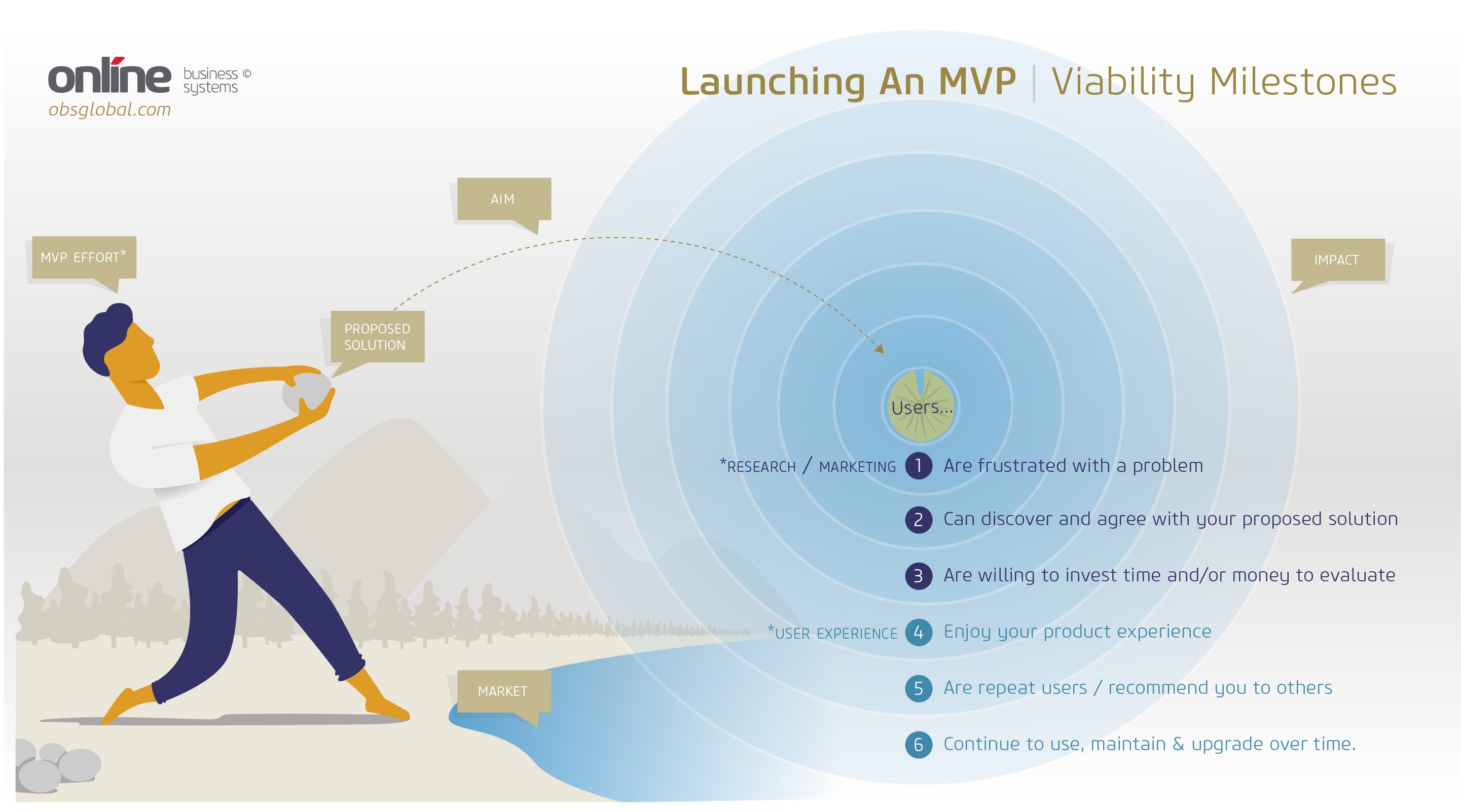

The infographic above is based on an analogy I like to use to explain what it feels like when you plan and execute a project that gets it right. I like this analogy so much because it does a great job of capturing all the feelings of preparation, practice, and eventual success I associate with lean digital product design.

Depending on the stone (your proposed solution), the effort you put into picking the right stone (research and planning), how you throw it (testing and deployment), how you aim it (refining your message), and which lily pad you target (audience), you’ll get a variety of results ranging from a disappointing plop to a satisfying splash (happy users and profitability)!

Part 1. Selecting and Launching the Stone

MVP Effort

Balanced application of resources and process

There is already a lot out there about building Minimum Viable Products (MVPs), so I’m not going to belabor the subject here. As it relates to the infographic above, however, the most valuable thing about building MVPs is understanding how to use them for quick turnaround to market and immediate feedback. This is arguably the most important step in setting up an anti-assumption project system in your company. The other thing to focus on as it relates to ‘Effort’ in the infographic is that the words Minimum and Viable are meant to be used together. Too many business owners and Product Managers focus on the Minimum part, while too many designers and developers focus on the Viable part.

Minimum and Viable are not meant to be mutually exclusive concepts; they are about finding balance. Unfortunately, without knowing your existing resources, timelines, and product roadmap, I can’t tell you how to strike that balance. That’s a question for your Product Manager.

What I can say is that your MVP should follow these three points every single time you build one:

- Only build the functions that will help test whether your product / feature is viable (based on the milestones above). Ignore any temptation to add non-critical features.

- You absolutely need to build in an analytics platform to measure usability, churn, retention, and conversions. This may seem obvious, but it’s always surprising to me how many Product Managers forget to plan for this.

- Don’t ignore your brand perception. Building surprise and delight into the fabric of your products will create emotional connections that will push you above competitors if you manage to hit all of the milestones above—and soften the blow if you don’t.
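To make the analytics point above a little more concrete, here is a minimal sketch of how conversion, retention, and churn might be computed from exported user records. The `User` record, its field names, and the definitions of each rate are assumptions made for illustration; they are not the export format or the formulas of any particular analytics platform, so adjust them to match your own.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class User:
    """Hypothetical per-user record exported from your analytics platform."""
    user_id: str
    signed_up: date
    last_active: date
    converted: bool  # e.g. finished checkout or upgraded to a paid plan


def mvp_metrics(users, period_start, period_end):
    """Return conversion, retention, and churn rates for one reporting period.

    Assumed definitions (adjust to match your own):
    - conversion: share of users signed up by period_end who converted
    - retention: share of users who signed up before period_start and
      were active at some point during the period
    - churn: 1 - retention
    """
    signed_up = [u for u in users if u.signed_up <= period_end]
    cohort = [u for u in users if u.signed_up < period_start]
    retained = [u for u in cohort
                if period_start <= u.last_active <= period_end]

    conversion = (sum(u.converted for u in signed_up) / len(signed_up)
                  if signed_up else 0.0)
    retention = len(retained) / len(cohort) if cohort else 0.0
    churn = 1.0 - retention if cohort else 0.0
    return {"conversion": conversion, "retention": retention, "churn": churn}


# Made-up sample data, purely for illustration.
sample_users = [
    User("a", date(2024, 1, 5), date(2024, 2, 10), True),
    User("b", date(2024, 1, 9), date(2024, 1, 20), False),
    User("c", date(2024, 1, 15), date(2024, 2, 3), False),
    User("d", date(2024, 2, 2), date(2024, 2, 2), True),
]
february = mvp_metrics(sample_users, date(2024, 2, 1), date(2024, 2, 29))
print(february)
```

The value of a sketch like this is mostly in forcing the team to agree on what "retained" and "converted" mean before launch, so the numbers can be compared from one release to the next.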

Proposed Solution

How well you understand your users and the problem they need solved.

Closely related to the effort you put into developing your MVP is how you go about choosing the features to include in it, or, put another way, the problem you think you’re solving for your users.

When it comes to these decisions, there is a big difference between launching a feature within a live product and launching an entirely new product. Assuming the live product is gathering decent metrics and regularly surveying users (or even just logging support tickets), you’re starting with user insights that a new product likely won’t have. Regardless, in a user-centered design process, it’s absolutely critical that you start with sound market analysis and user data before you “select your stone” (decide what your MVP looks like). That means ensuring your organizational goals and resources are properly aligned to a solution that users actually need.

A few tools and techniques that product teams I’ve worked with have employed in the past are TAM, SAM, and SOM reports and Innovation Engineering Yellow Cards. These tools are great for business-side decision making, like whether or not you can even afford to make the product based on its potential for returning a profit.
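As a rough illustration of the arithmetic behind a TAM, SAM, and SOM report, the sketch below uses a common top-down approach: size the total market, then narrow it to the share you can serve and the share you can realistically win. The function names, the `margin` parameter, and every number are hypothetical, and the payback calculation is only one simple way to frame the "can we afford it" question.

```python
def market_sizes(potential_customers, annual_revenue_per_customer,
                 serviceable_share, obtainable_share):
    """Top-down estimate of TAM, SAM, and SOM in yearly revenue."""
    tam = potential_customers * annual_revenue_per_customer
    sam = tam * serviceable_share  # part of TAM your product can serve
    som = sam * obtainable_share   # part of SAM you can realistically win
    return tam, sam, som


def years_to_recoup(build_cost, som, margin):
    """Rough payback period: build cost over yearly profit from the SOM."""
    yearly_profit = som * margin
    if yearly_profit <= 0:
        return float("inf")  # never pays back under these assumptions
    return build_cost / yearly_profit


# Made-up inputs: 1M potential customers at $120/year, of which we can
# serve 25% and expect to win 4% of those.
tam, sam, som = market_sizes(1_000_000, 120, 0.25, 0.04)
payback = years_to_recoup(build_cost=300_000, som=som, margin=0.5)
print(f"TAM ${tam:,.0f}  SAM ${sam:,.0f}  SOM ${som:,.0f}")
print(f"Estimated payback: {payback:.1f} years")
```

Even with invented inputs, running the numbers this way makes the assumptions explicit, which is the point: each share is a guess you can later check against real data.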

To receive more holistic user-centered feedback, I like to employ user surveys, ethnographic research, and ideally Design Sprints before jumping into high-fidelity design and coding.

Ultimately all of these methods and tools are intended to ensure the features you’re building into your MVP are attempting to solve a problem that real users have acknowledged they actually need solved. Of course, that’s not to say that your instincts about your product and users are completely wrong, because let’s face it, you have to start somewhere.

In fact, going with your gut will probably get you somewhere in the ballpark of 50% to 90% of the way along the right path. But without a process in place to properly validate your assumptions, there is often no way of knowing where you really are on that spectrum until it’s too late.

Aim

Your ability to identify and target users

So now that you’ve built the essential features, you’re ready to launch your stone at the lily pad... but wait... which lily pad was it again?

Getting feedback from a handful of survey respondents or waiting list sign-ups from a few hundred potential users is one thing. Having a profitable number of users discover your product and choose you over a competitor in the real world is a much bigger challenge.

That’s why before you send that stone hurtling through the air, you’ll want to make sure you’ve properly considered your communication and marketing strategy and how you plan to gather feedback and respond to the results.

Regardless of how you’ll spread the message (social media, email marketing, paid advertising, etc.), knowing where your users are coming from and which channels are the most effective is just as important as knowing whether or not they are able to use your product, convert, and remain loyal.

For those with a more robust marketing budget, HubSpot and Marketo are good tools for tracking the flow of users at the top of the funnel. They’re excellent soup-to-nuts products for almost all of your inbound marketing needs and CRM tracking.

For those on a tighter budget, check out Adjust for measuring inbound marketing on mobile-based platforms, and MailChimp or SendGrid for email marketing and SaaS notification tools. Of course, Google Analytics is another affordable option for web-based products and AdWords campaigns. Facebook and Twitter for Business also have their own ad management and analytics dashboards.
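Whichever tool you pick, the underlying comparison of channels is simple. The sketch below assumes you can export a list of visits tagged with the channel they came from and whether they converted; the data shape and the function name are hypothetical and do not reflect the export format of any of the tools mentioned above.

```python
from collections import defaultdict


def conversion_by_channel(visits):
    """Return {channel: conversion rate} from (channel, converted) pairs."""
    totals = defaultdict(int)
    conversions = defaultdict(int)
    for channel, converted in visits:
        totals[channel] += 1
        if converted:
            conversions[channel] += 1
    return {channel: conversions[channel] / totals[channel]
            for channel in totals}


# Made-up visits, purely for illustration.
sample_visits = [
    ("email", True),
    ("email", False),
    ("social", False),
    ("social", False),
    ("paid", True),
]
rates = conversion_by_channel(sample_visits)

# List channels from most to least effective.
for channel, rate in sorted(rates.items(), key=lambda item: item[1],
                            reverse=True):
    print(f"{channel}: {rate:.0%}")
```

With real traffic you would also want to look at the number of visits behind each rate, since a channel with a handful of visitors can look far better or worse than it really is.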

With all of the issues above adequately addressed, you should finally be ready to launch that stone. The preparation may seem like a lot of work, but in the end these techniques produce results much faster and usually much more successfully than the old waterfall method of setting up requirements, detailed creative briefs and strict budgets.

In Part 2 of this post, I’ll go into a little more detail about viability milestones during the post-launch impact stage. This includes how to track the milestones using your metrics system and what warning signs to look for that could indicate you’re not hitting all six of them.

Any questions or comments so far? Feel free to leave a comment below!
